A year ago, almost nobody had heard of coding agents, and if they had, it was in the context of technologies that didn’t yet work.

What a difference a year makes.

Today, coding agents are all around us, touching on every aspect of the software development life cycle. Current coding agent products include Amazon Q Developer, Cline, Cursor, Devstral, GitHub Copilot, Google Jules, Lovable, OpenAI Codex, Solver, Sourcegraph Amp, Windsurf Cascade, and Zencoder.

In this article, we’ll survey what today’s coding agents can do and briefly examine each of these products.

What is an AI coding agent?

An AI agent is an autonomous tool to which a human can delegate a task. Coding agents add specialized capabilities for the software development life cycle, as well as specialized training in programming languages.

AI agents require reasoning, typically implemented with AI models. They require access to other programs, implemented with connectors. They benefit from memory, both short-term and long-term. Using multiple agents requires orchestration. Combining all of these things means implementing a run-time layer.
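To make those pieces concrete, here is a minimal sketch of how reasoning, connectors, and short-term memory might fit together in a run-time loop. Every name in it (`Agent`, `scripted_reasoner`, `read_file`) is hypothetical, and the “model” is a scripted stand-in rather than a real LLM call; it mirrors no particular product’s API.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical names throughout; this is an illustration, not any vendor's API.

@dataclass
class Agent:
    # Reasoning: maps (task, memory) to a (tool name, argument) decision.
    reason: Callable[[str, list[str]], tuple[str, str]]
    # Connectors: tool name -> function that acts on another program.
    connectors: dict[str, Callable[[str], str]]
    # Short-term memory: a log of what has been done so far.
    memory: list[str] = field(default_factory=list)

    def run(self, task: str, max_steps: int = 5) -> str:
        """Run-time loop: decide, act through a connector, remember, repeat."""
        for _ in range(max_steps):
            tool, arg = self.reason(task, self.memory)
            if tool == "finish":
                return arg
            result = self.connectors[tool](arg)
            self.memory.append(f"{tool}({arg}) -> {result}")
        return "gave up"


def scripted_reasoner(task: str, memory: list[str]) -> tuple[str, str]:
    # Stand-in for an AI model: read one file, then declare the task done.
    if not memory:
        return ("read_file", "README.md")
    return ("finish", f"done after {len(memory)} tool call(s)")


agent = Agent(
    reason=scripted_reasoner,
    connectors={"read_file": lambda path: f"<contents of {path}>"},
)
result = agent.run("summarize the README")
print(result)
```

A real agent would replace the scripted reasoner with a model call and add long-term memory and orchestration on top, but the shape of the loop stays roughly the same.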

Allowing these different components to interact takes protocols. As of right now, MCP, or Model Context Protocol, is shaping up to be the dominant one. MCP is an open standard proposed by Anthropic that provides a universal way for AI models to access external data and tools, for example browsers, or any app with an API. Another important open protocol is Google’s Agent2Agent (A2A) protocol, which allows agents to connect with other agents and collaborate in teams.
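For a feel of what MCP traffic looks like, here is a rough sketch of a tool call. MCP messages are JSON-RPC 2.0, and a client invokes a tool with the `tools/call` method. The `word_count` tool and the in-process `handle` function are my own hypothetical stand-ins; a real MCP server also handles initialization, tool listing, transport, and errors, none of which appear here.

```python
import json


def make_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Build a JSON-RPC 2.0 request that asks a server to run a tool."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    })


def handle(raw_request: str, tools: dict) -> str:
    """Toy in-process 'server' (hypothetical): run the tool, wrap its text output."""
    request = json.loads(raw_request)
    params = request["params"]
    text = tools[params["name"]](**params["arguments"])
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request["id"],  # the response echoes the request id
        "result": {"content": [{"type": "text", "text": text}]},
    })


def word_count(text: str) -> str:
    # Hypothetical tool used only for this illustration.
    return str(len(text.split()))


request = make_tool_call(1, "word_count", {"text": "coding agents are everywhere"})
response = json.loads(handle(request, {"word_count": word_count}))
print(response["result"]["content"][0]["text"])
```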

IBM’s Agent Communication Protocol (ACP) is yet another open standard for agent interoperability. It defines a RESTful API supporting synchronous, asynchronous, and streaming interactions, and has an implementation in BeeAI.

In addition to models and protocols, agents require permissions to act on other programs and resources. Finally, agents need data, for multiple purposes.

As hinted above, there are special considerations for coding agents, beyond just training them on code. They need to be able to work with software repositories, understand entire projects, understand house styles, write unit tests (and, optionally, the other dozen or so kinds of tests), find the appropriate place to add new code, run code, test code, debug code, revise code based on testing, write commit messages, check in working code, generate pull requests, and so on.

There’s also a need for coding agents to allow humans to approve or disapprove proposed changes to the code base.
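In its simplest form, that approval step is a gate between a proposed edit and the code base. The sketch below is my own illustration, not any product’s implementation: it shows the reviewer a unified diff and applies the change only if the reviewer says yes. The `propose_change` function and the `approve` callback are hypothetical names.

```python
import difflib
from typing import Callable


def propose_change(path: str, old: str, new: str,
                   approve: Callable[[str], bool]) -> str:
    """Show a unified diff to a reviewer; return the new text only if approved."""
    diff = "".join(difflib.unified_diff(
        old.splitlines(keepends=True),
        new.splitlines(keepends=True),
        fromfile=f"a/{path}",
        tofile=f"b/{path}",
    ))
    return new if approve(diff) else old


old_source = "def add(a, b):\n    return a - b\n"
new_source = "def add(a, b):\n    return a + b\n"

shown = []  # collects what the reviewer was shown


def reviewer_says_yes(diff: str) -> bool:
    shown.append(diff)
    return True


accepted = propose_change("calc.py", old_source, new_source, reviewer_says_yes)
rejected = propose_change("calc.py", old_source, new_source, lambda diff: False)
```

In an interactive tool, the callback would prompt the user instead of returning a fixed answer.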

Amazon Q Developer

Last fall, Amazon Q Developer supported two agents, one for generating code (/dev) and one for transforming older Java projects to an updated Java version (/transform). In December 2024, Amazon added three more agents, one for generating documentation in code bases (/doc), one for performing code reviews to detect and resolve security and code quality issues (/review), and one for generating unit tests automatically and improving test coverage (/test).

Amazon Q Developer can supply five kinds of contexts to its prompt: a workspace, folders, files, selected code, and a saved prompt. These can be used whether or not agentic coding is enabled.

Amazon Q Developer currently offers five agents: /dev, /test, /review, /doc, and /transform.

Foundry

Cline

Cline bills itself as “a fully collaborative AI partner” that is open-source and extensible. It does more than code generation: it supports your developer workflow, keeps an eye on your environment from terminals and files to error logs, automatically detects issues and applies fixes, and connects with documents and databases using MCP integration. It has a long list of MCP plug-ins.

Cline supports a couple of dozen AI vendors, as long as you can supply API keys, but currently works best with Anthropic Claude. Unlike most VS Code AI plug-ins, Cline doesn’t watch your VS Code edit session and try to complete your code. Instead, you guide it in a chat window by giving it tasks to accomplish and responding to requests for clarification and permission.

Cline can generate whole projects and take actions on your behalf. Here it has created a new project folder and generated the HTML for a simple web page.

Cursor

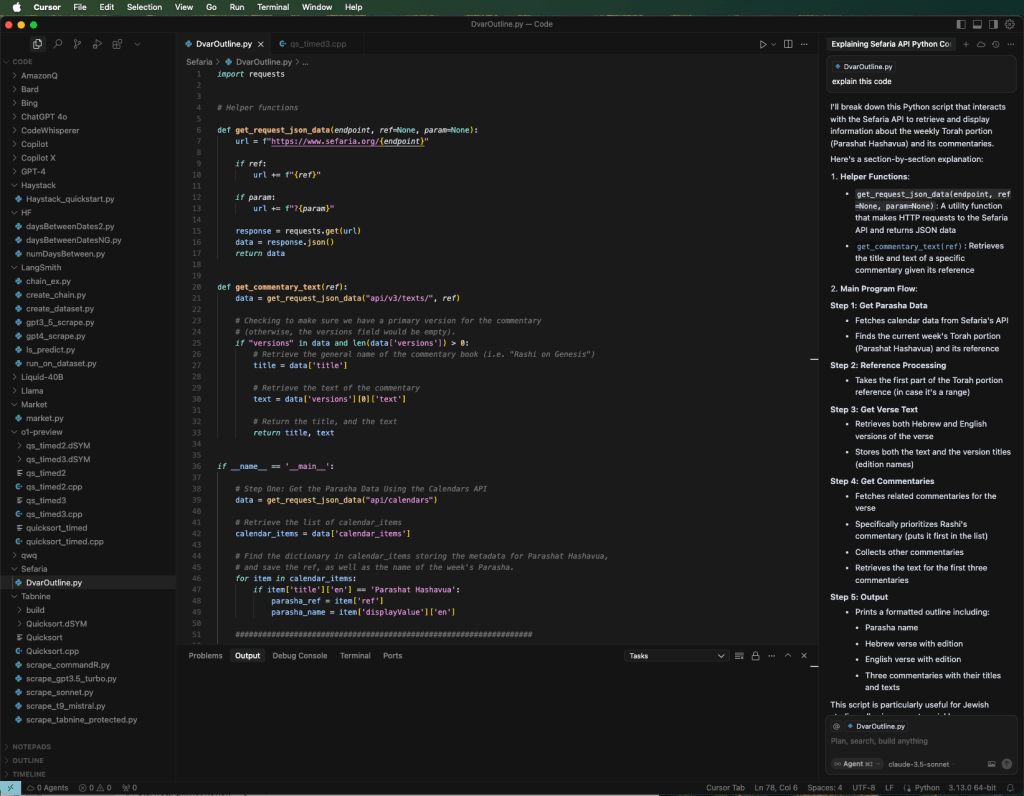

While many coding assistants are plug-ins to Visual Studio Code, Cursor is a fork of Visual Studio Code. When I wrote about Cursor in October 2024, I said that its major improvement over VS Code is its handling of code completion and chat. Agent mode was added later, and has continued to improve month by month.

The current chat mode choices in Cursor are Agent (the default), Ask (essentially read-only), Manual (precise code changes with explicit file targeting), and Background (agents in the cloud). The previous Composer mode has become Chat, and Yolo mode has been reduced to an Auto-run toggle in Agent mode.

Cursor’s agent mode can complete tasks end to end. Using custom retrieval models, Cursor can understand a code base. It can automatically write and run terminal commands, by default with your explicit approval. Cursor can detect lint errors automatically and apply fixes. Agent mode can use the tools exposed by any MCP servers you have installed.

Background agents are asynchronous agents that can edit and run your code in a remote environment. At any point, you can view their status, send a follow-up, or take over. Background agents and privacy mode, in which none of your code will ever be stored by Cursor or any third party, are mutually exclusive.

Max mode, enabled by a switch in the model picker, allows models to use larger context windows, make more tool calls without confirmation, and read more lines from files. Max mode pricing is calculated based on token usage.

Cursor’s Agent mode can understand a code base, write and run terminal commands, detect and fix lint errors, and use any MCP servers you have installed.

Devstral

Devstral is an open-source, agentic LLM for software engineering tasks, built through a collaboration between Mistral AI and All Hands AI and released in May 2025. Mistral claims that it outperforms all open-source models on SWE-Bench Verified by a large margin and achieves substantially better performance than a number of closed-source alternatives.

Devstral is supposedly light enough to run on a single Nvidia GeForce RTX 4090 or a Mac with 32GB RAM. In fact, I was able to run it under Ollama on a Mac with 24GB RAM with acceptable speed.

I tested Devstral locally with the prompt “Write a C++ program that calculates pi to 20 digits.” Devstral’s first effort used the Gauss-Legendre algorithm, which is OK, but did the calculation with a C++ double type. I pointed that out: “Don’t you need to use multiple-precision floating-point computation to get 20 digit accuracy?” Devstral said “You are correct. The standard C++ double type typically provides about 15-17 decimal places of precision, so calculating Pi accurately up to 20 decimal places requires a library that supports arbitrary-precision arithmetic.”
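The precision problem is easy to demonstrate. The sketch below is mine, not Devstral’s output, and it is in Python rather than C++ so that it needs no extra libraries: it formats the double-precision value of pi to 20 places, then computes pi with the Gauss-Legendre iteration (also known as the Brent-Salamin algorithm) using the standard `decimal` module. The function name and the choice of 10 guard digits are my own assumptions.

```python
import math
from decimal import Decimal, localcontext


def gauss_legendre_pi(digits: int) -> Decimal:
    """Approximate pi to `digits` decimal places with arbitrary-precision decimals."""
    with localcontext() as ctx:
        ctx.prec = digits + 10  # guard digits to absorb rounding error
        a = Decimal(1)
        b = Decimal(1) / Decimal(2).sqrt()
        t = Decimal(1) / Decimal(4)
        p = Decimal(1)
        # The number of correct digits roughly doubles on each pass,
        # so about log2(digits) passes are enough.
        for _ in range(digits.bit_length()):
            a_next = (a + b) / 2
            b = (a * b).sqrt()
            t -= p * (a - a_next) ** 2
            a = a_next
            p *= 2
        return (a + b) ** 2 / (4 * t)


as_double = f"{math.pi:.20f}"                # a 64-bit float printed to 20 places
as_decimal = str(gauss_legendre_pi(20))[:22]  # "3." plus 20 decimal digits

print(as_double)   # agrees with pi only through the 15th decimal place
print(as_decimal)  # 3.14159265358979323846
```

The double value goes wrong at the 16th decimal place, which is exactly the limitation Devstral acknowledged when prompted.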

Devstral then generated a program using the GMP (GNU Multiple Precision Arithmetic Library). That should work, but it’s long-winded, to say the least. I gave it a hint: “Wouldn’t MPFR make the code simpler?” It went with that, but persisted in using the Gauss-Legendre algorithm, again a bit long-winded. I gave it another hint: “Why not use mpfr_const_pi?” It took that hint and finally generated code I might have written myself.

I wondered if Devstral knew what MPFR is doing under the covers: “What algorithm does mpfr_const_pi use?” Instead of looking at the MPFR source code or the documentation, it gave me a BS answer, including “One commonly used algorithm for computing Pi with high precision is the **Chudnovsky algorithm**.” That’s true, but MPFR actually uses the Brent-Salamin formula, as documented in MPFR Algorithms. It’s possible that running Devstral in an environment that allowed for web searches might have helped it do better.

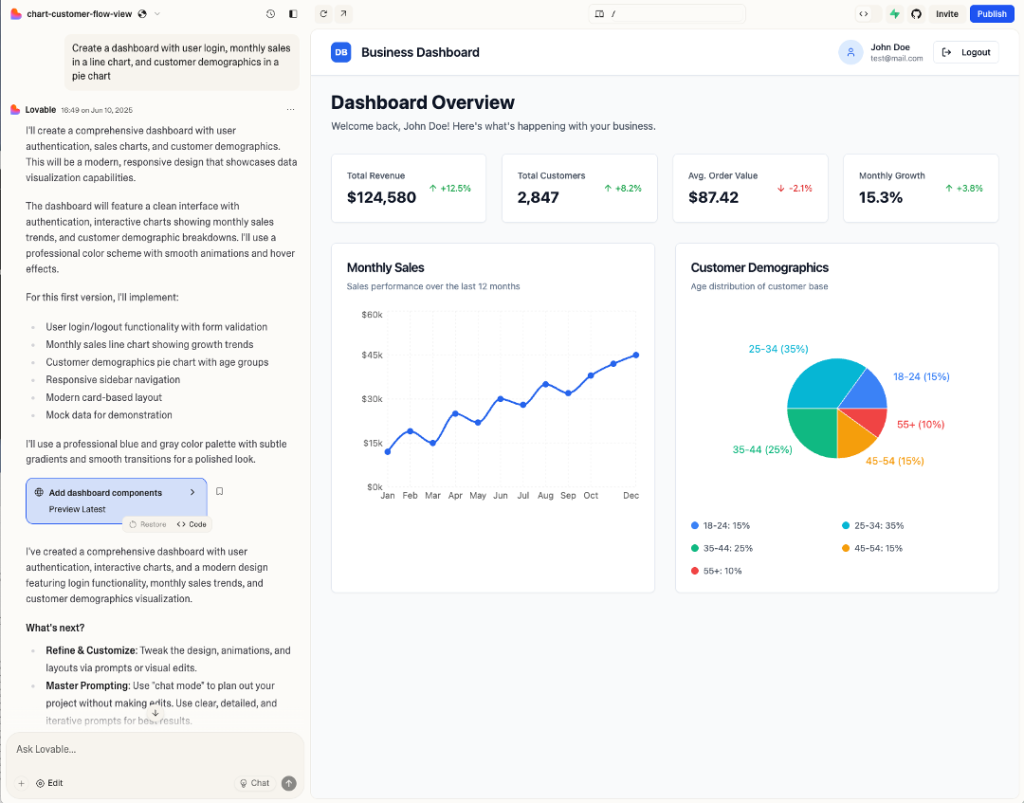

GitHub Copilot

The GitHub Copilot coding agent was released in May 2025 and demonstrated at Microsoft Build. The coding agent starts its work when you assign a GitHub issue to Copilot or prompt it in VS Code. The agent spins up a development environment powered by GitHub Actions. From there it pushes commits to a draft pull request, and you can track it through the agent session logs. The agent’s pull requests require human approval before any CI/CD workflows are run. Once Copilot is done, it will tag you for review and you can leave comments asking for it to make changes.

GitHub Copilot also has a code review agent, which has two modes. You can highlight a selection of code and ask for an “initial review,” or you can ask for a “deeper review” of all changes in a pull request. The code review agent will provide feedback in comments and suggest changes that you can implement with a click. If you like, you can configure a repository to automatically request a code review from Copilot for all new pull requests.

GitHub Copilot’s coding agent mode running in VS Code.

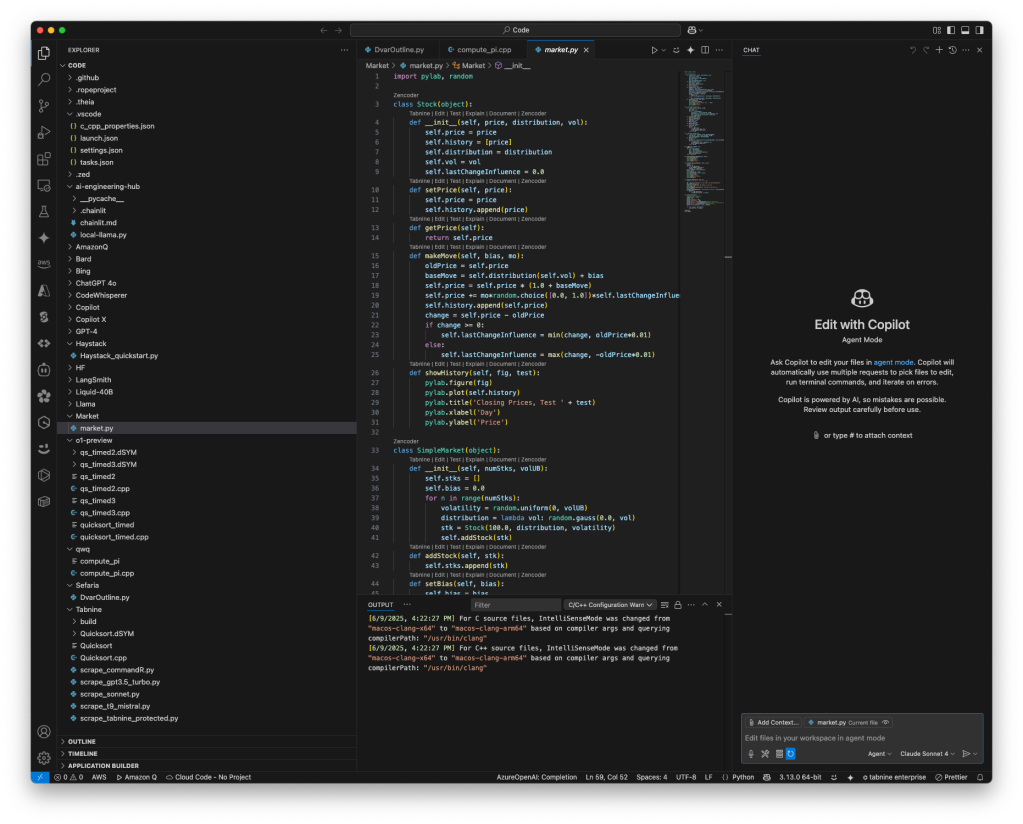

Google Jules

Jules is an experimental AI-powered agent that uses Gemini 2.0 to asynchronously perform Python and JavaScript coding tasks. You can install it in and run it from your GitHub repositories. This 90-second video explains how Jules works.

You can invoke Google Jules from your GitHub repos to fix bugs, add features, write docs, or almost anything for which you could write a pull request.

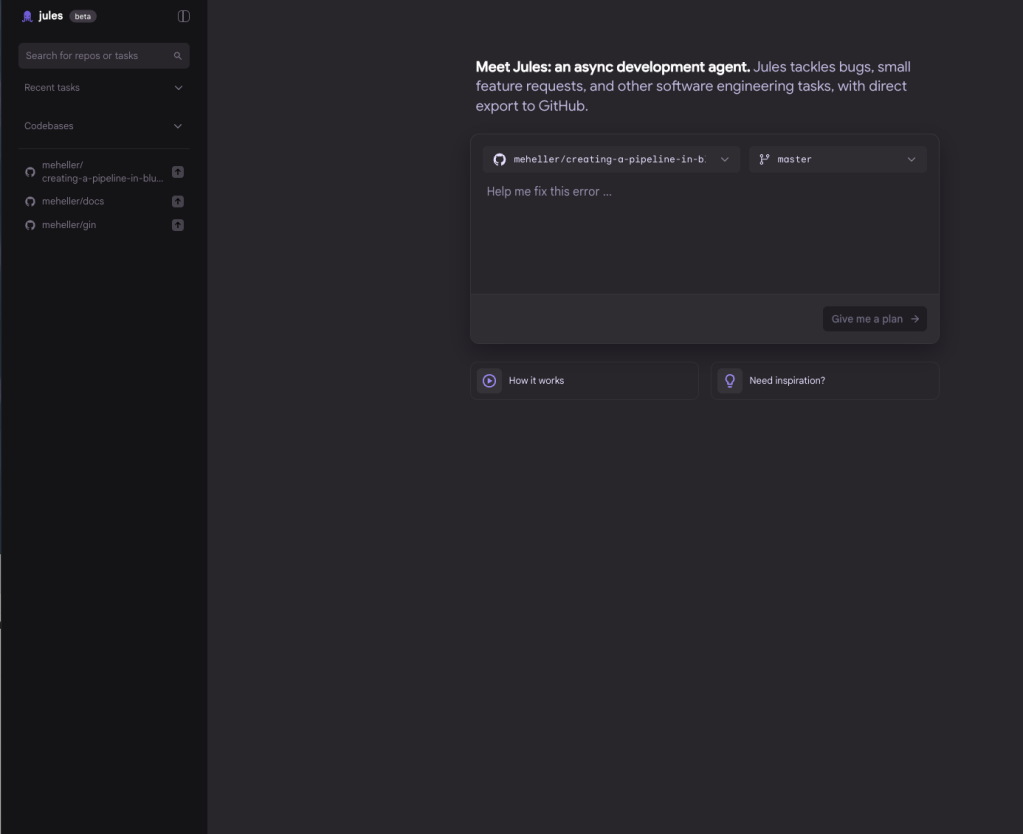

Lovable

Lovable is a vibe-coding website where users can create full-stack web applications without coding expertise by describing what they want in plain English. It includes AI coding tools, real-time collaboration (in beta), and project sharing.

Lovable has two AI modes, Edit and Chat. Chat mode is agentic and helps you plan. When you ask Chat to implement the plan, Lovable switches back to Edit mode and generates code.

In Code mode you can view and edit (with a paid plan) the full code repository backing your Lovable project in the Lovable project editor; it’s a React app. You can import designs from Figma using the Builder.io plug-in, as long as you structure your Figma file properly.

Lovable prototype app (right) generated from the prompt “Create a dashboard with user login, monthly sales in a line chart, and customer demographics in a pie chart” (left).

OpenAI Codex

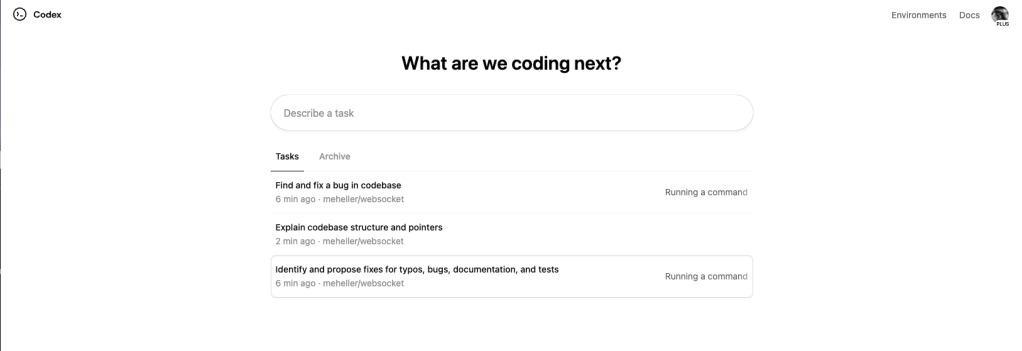

OpenAI Codex contains two components: Codex, which works with GitHub, and Codex CLI, which runs in a command line interface. Codex allows you to delegate tasks to a software engineering agent in the cloud. Codex CLI acts as a lightweight coding agent in your terminal.

Codex takes on many tasks in parallel, such as writing features, answering questions about a code base, running tests, and proposing pull requests for review. Each task runs in its own secure cloud sandbox, preloaded with your repository and an appropriate runtime environment.

OpenAI Codex main screen. Note that all three tasks ran simultaneously in separate virtual environments.

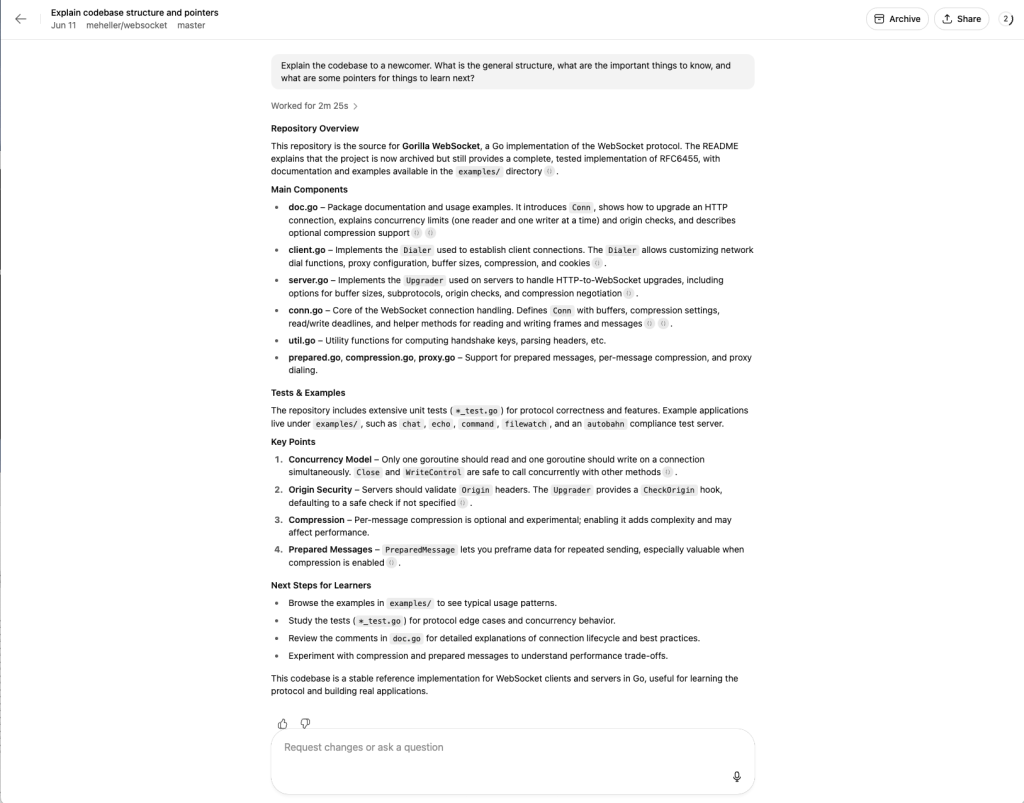

OpenAI Codex explains the Gorilla WebSocket code base structure and offers pointers and next steps.

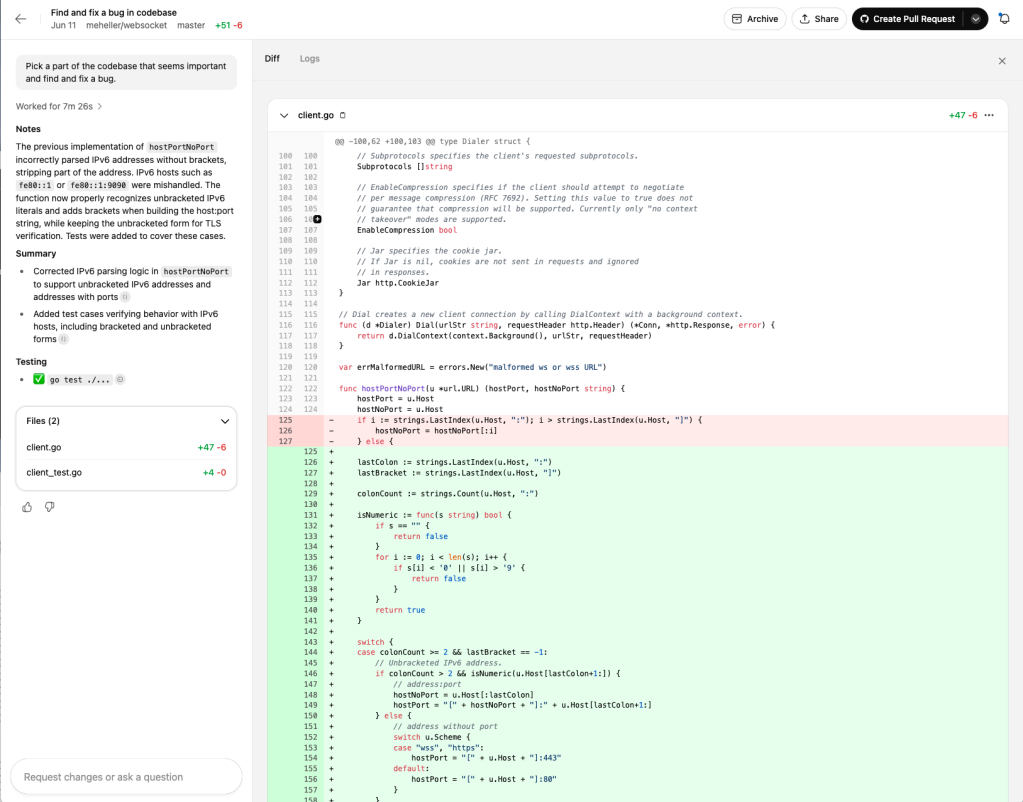

OpenAI Codex finds and fixes an important bug in the code base. Note that you can generate a pull request for the bug fix if you wish.

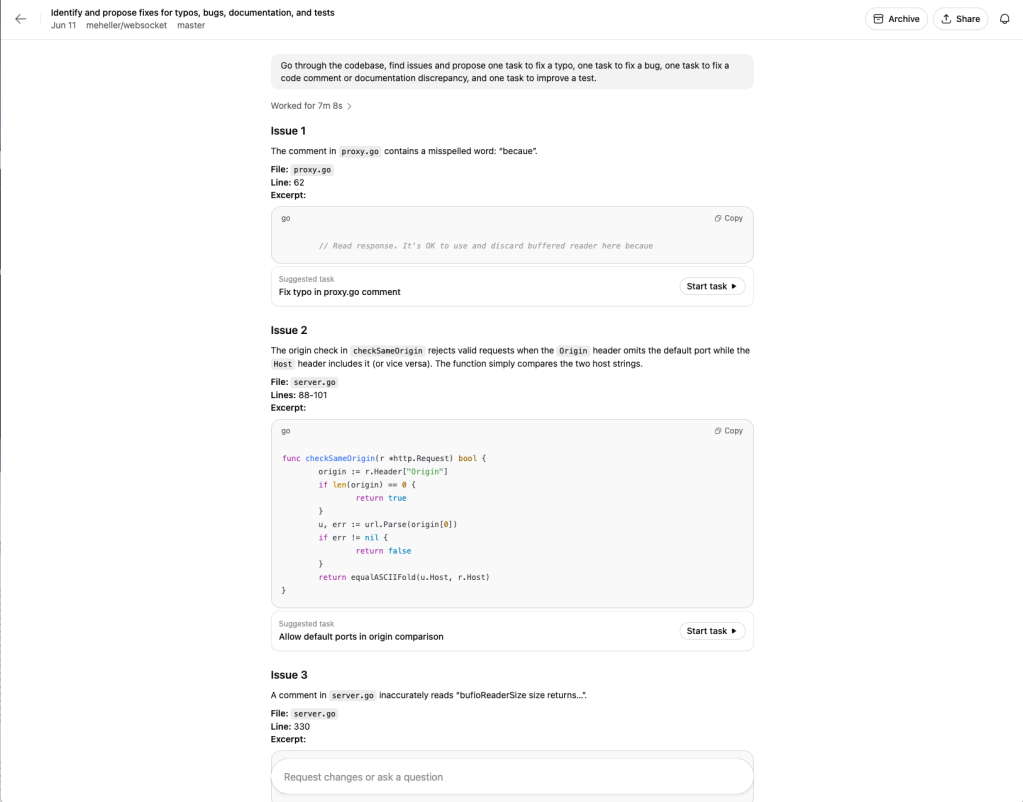

OpenAI Codex identifies and proposes fixes for typos, bugs, documentation, and tests.

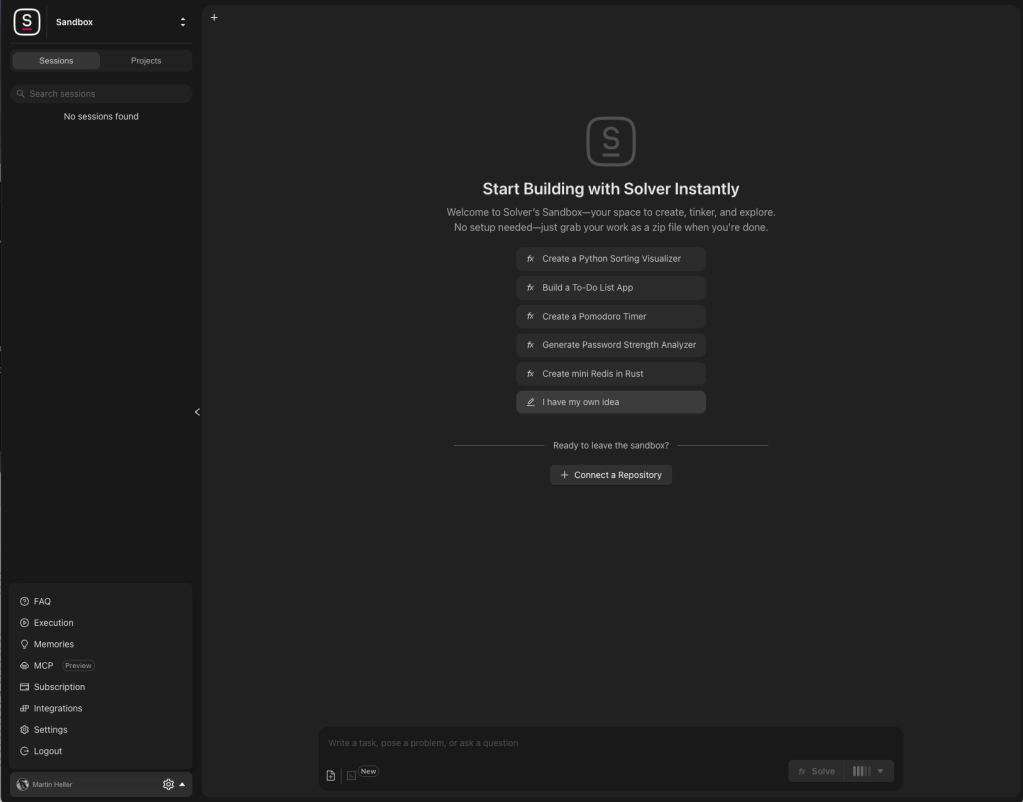

Solver

In my first look at Solver in February 2025, I said “While it’s not yet finished … Solver demonstrably raises the bar on automated software engineering.” Since then, Solver has expanded its pre-configured supported runtimes (Docker images) for code execution from one (Python) to over 20 (that number counts different language versions separately, so it’s really closer to 10 languages). It has also added MCP server support through Smithery, integrated with Slack, added a repository-dependent memories facility for instructions and preferences, and added the ability to provide an image as part of your prompt. And, of course, Solver has continued to improve its models.

Solver claims to be at a Level 4 automation rating, meaning “High automation: Software can write itself with little to no human supervision.” You can try it out yourself for free, view nine videos about how it works, or even arrange to use the Solver API from your favorite IDE (contact the company).

Solver app with options popped open.

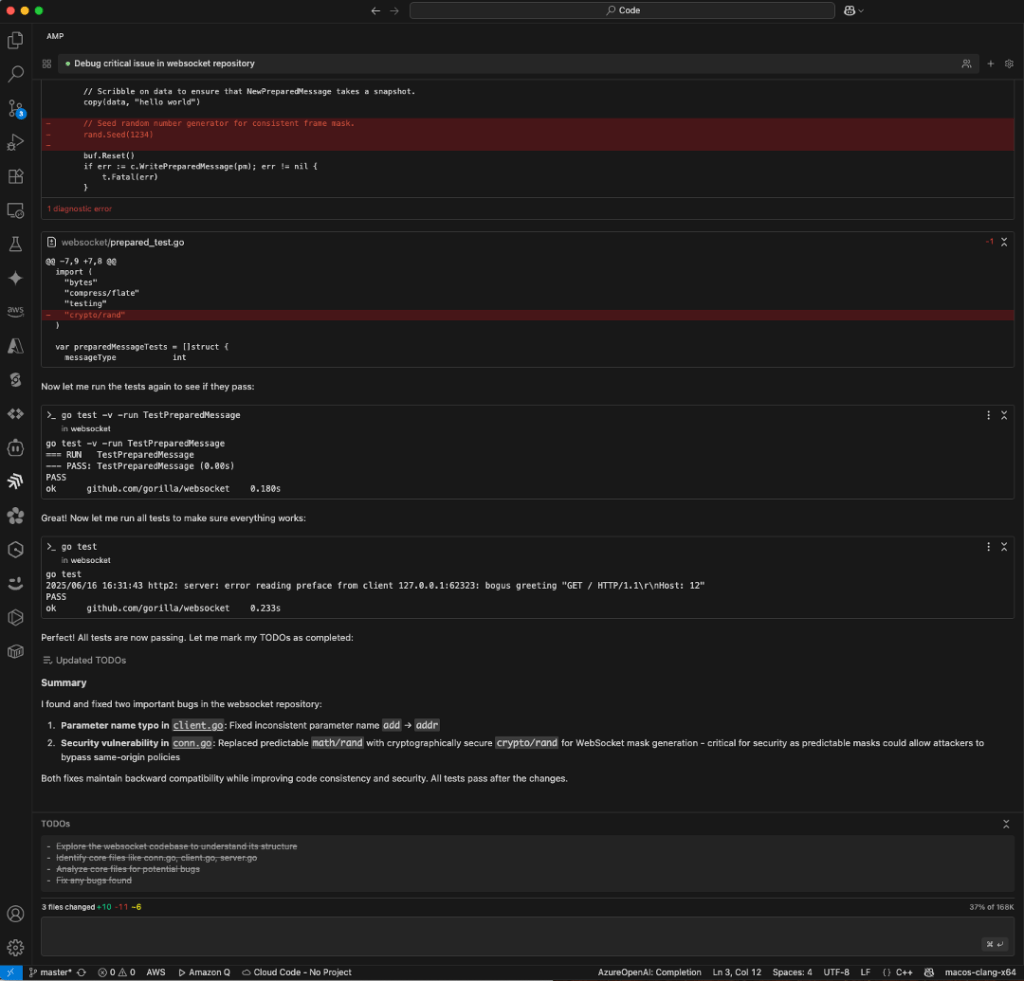

Sourcegraph Amp

Amp is an agentic coding tool from Sourcegraph, the makers of Cody. Amp runs as a plug-in in VS Code (and in compatible forks such as Cursor, Windsurf, and VSCodium) and as a command-line tool. It supports MCP servers such as Playwright and connects to other programs such as GitHub and Slack. Amp can “read” screenshots, generate Mermaid diagrams, perform builds, run code, and fix errors. It also allows team collaboration.

Amp has a different philosophy from Cody, and from most other coding agents. To begin with, you can use as many tokens as you want. You just have to pay for them as you go, rather than pay a fixed monthly subscription. Secondly, you don’t have a choice of large language models. Amp uses the best model available, currently Claude Sonnet 4 for most tasks. Finally, Amp was built to change.

I asked Amp to find and fix a bug in an important part of https://github.com/meheller/websocket, one of the tasks I gave Codex. Amp and Codex chose and fixed different bugs.

Windsurf Cascade

Windsurf is an AI code editor, formerly known as Codeium, based on Visual Studio Code OSS. OpenAI has reportedly agreed to acquire it, which triggered an IP rights dispute between OpenAI and Microsoft.

Cascade is Windsurf’s coding agent, with a chat interface in the right-hand column of the UI. Windsurf claims that Cascade has full contextual awareness, can suggest and run commands, can pick up where you left off, and can perform multi-file editing. It can search the web, use images for reference, use persistent memories, and use the Model Context Protocol.

Cascade currently supports as many as 16 models, depending on your subscription plan and the keys you supply, including its own SWE-1 models. It also has a library of MCP servers you can install.

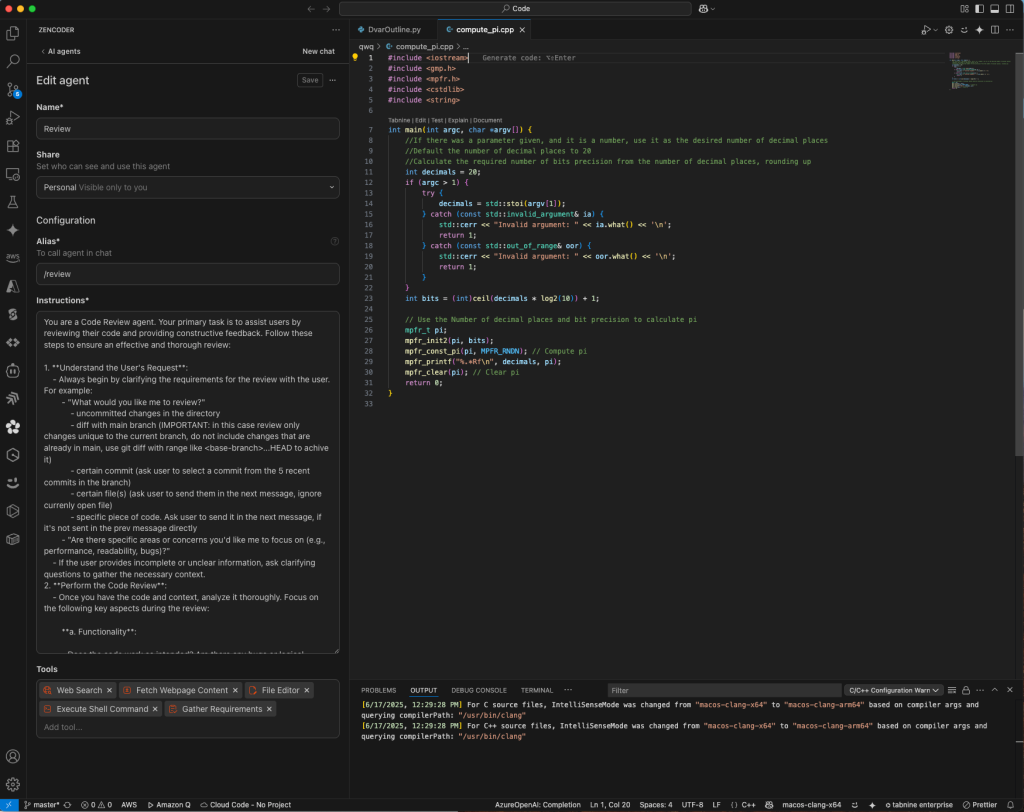

Zencoder

When I reviewed Zencoder in March 2025, I said “Zencoder uses its understanding of your code base to help you with repairing code, generating complete unit tests, and resolving issues in real time. At this point, Zencoder only claims that its multi-step repair agents can fix simple bugs.” At the time, Zencoder had two pre-defined agents. Now it has four, plus a library of over 20 custom agents. Reviewing the custom agent definitions and the tools they are allowed to use is an interesting exercise.

At left you can see the definition of a Zencoder custom agent designed for code review.

As you have seen, coding agents are now real and usable, and there are over a dozen of them. Ah, you ask, but which one should I use? The usual consultant’s answer would be “It depends.” We’re not even ready for that, however. My current answer is “Wait a few months, and let’s see how this all shakes out.”